Mimicking the Human Brain

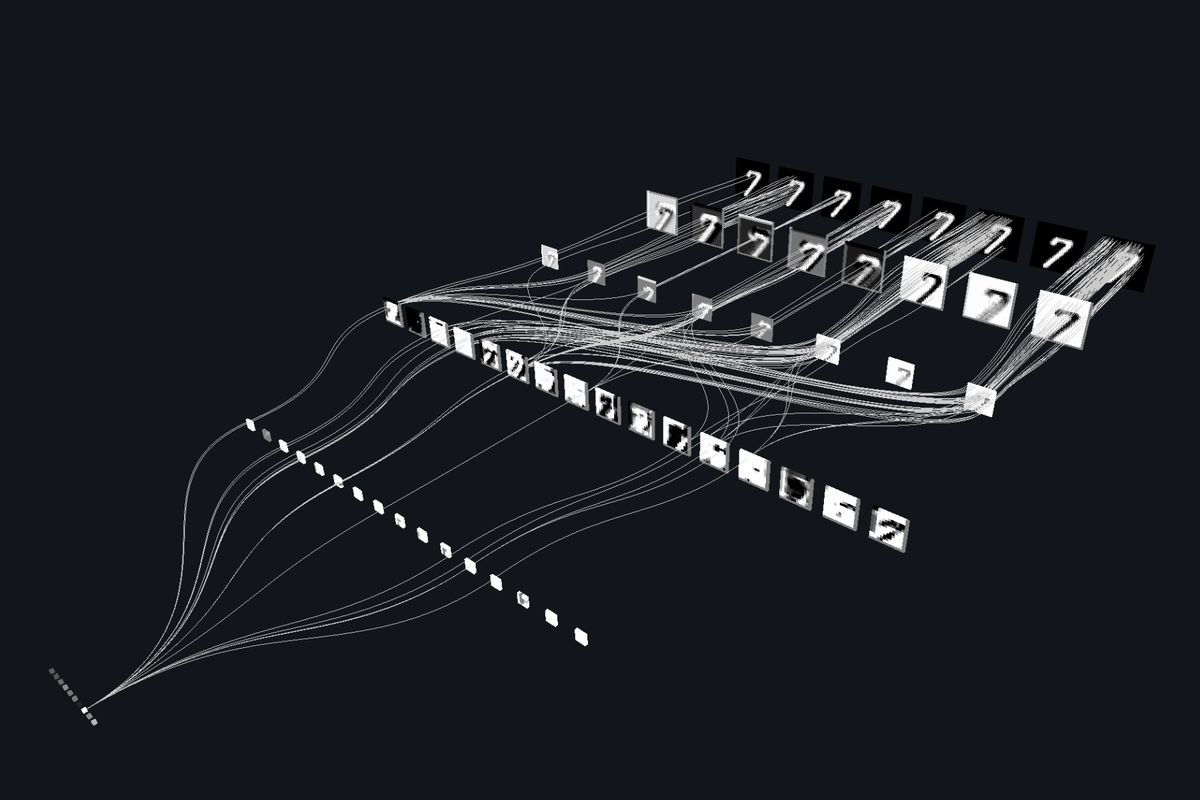

Neural networks are computing systems inspired by the biological neural networks that constitute animal brains. These systems "learn" to perform tasks by considering examples, generally without being programmed with task-specific rules.

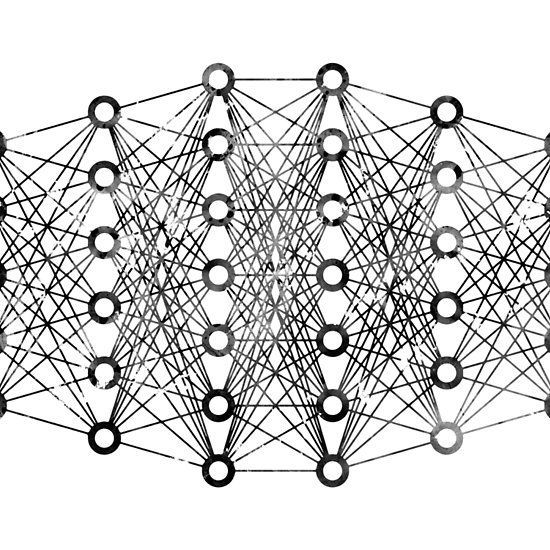

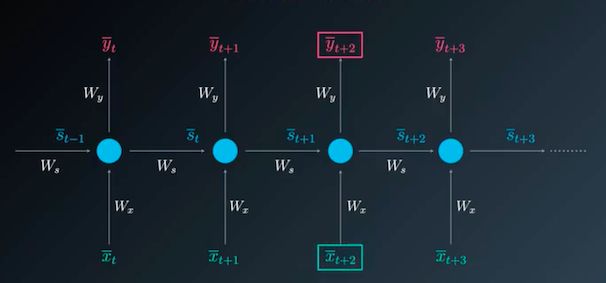

Just as our brains consist of interconnected neurons, artificial neural networks consist of interconnected nodes (artificial neurons). Each connection carries a weight that is adjusted as learning proceeds, strengthening or weakening the signal passed between neurons.
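To make the idea of adjustable weights concrete, here is a minimal sketch of a single artificial neuron that learns the logical AND function from four examples. The text above does not name a learning rule, so this sketch assumes the classic perceptron update; the names `predict`, `train`, and `AND_EXAMPLES` are hypothetical and chosen only for illustration.

```python
# A single artificial neuron trained with the perceptron rule.
# Assumption: the perceptron update is used here purely as an illustration;
# real networks typically use many neurons and gradient-based training.

def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train(examples, epochs=20, learning_rate=1.0):
    """Adjust the weights a little after every wrong prediction."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Each example pairs two inputs with the desired output (logical AND).
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_EXAMPLES)
for inputs, target in AND_EXAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

Nothing in this code states the rule for AND. The neuron starts with all weights at zero and arrives at a working set of weights only by being corrected on examples, which is the "learning without task-specific rules" described earlier.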

The real power of neural networks lies in their ability to recognize patterns and relationships in data that are too complex for people to spot by hand or for traditional, rule-based algorithms to capture effectively.